About

This project was done for the Moon Ranger project at CMU.

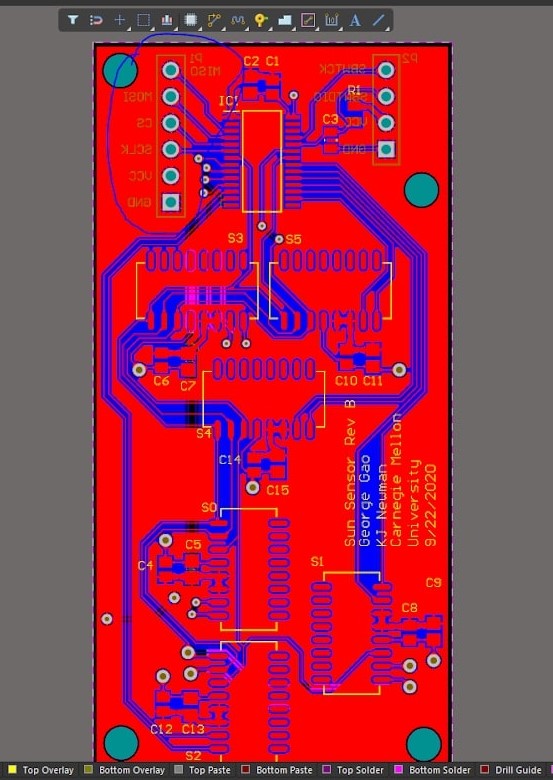

The PCB was designed over the course of the fall 2020 semester. I iterated on an existing Altium design, which had only 1 photodiode array and utilized a different MCU. My final design included 6 photodiodes and a new 28-pin MCU, an MSP430G2553.

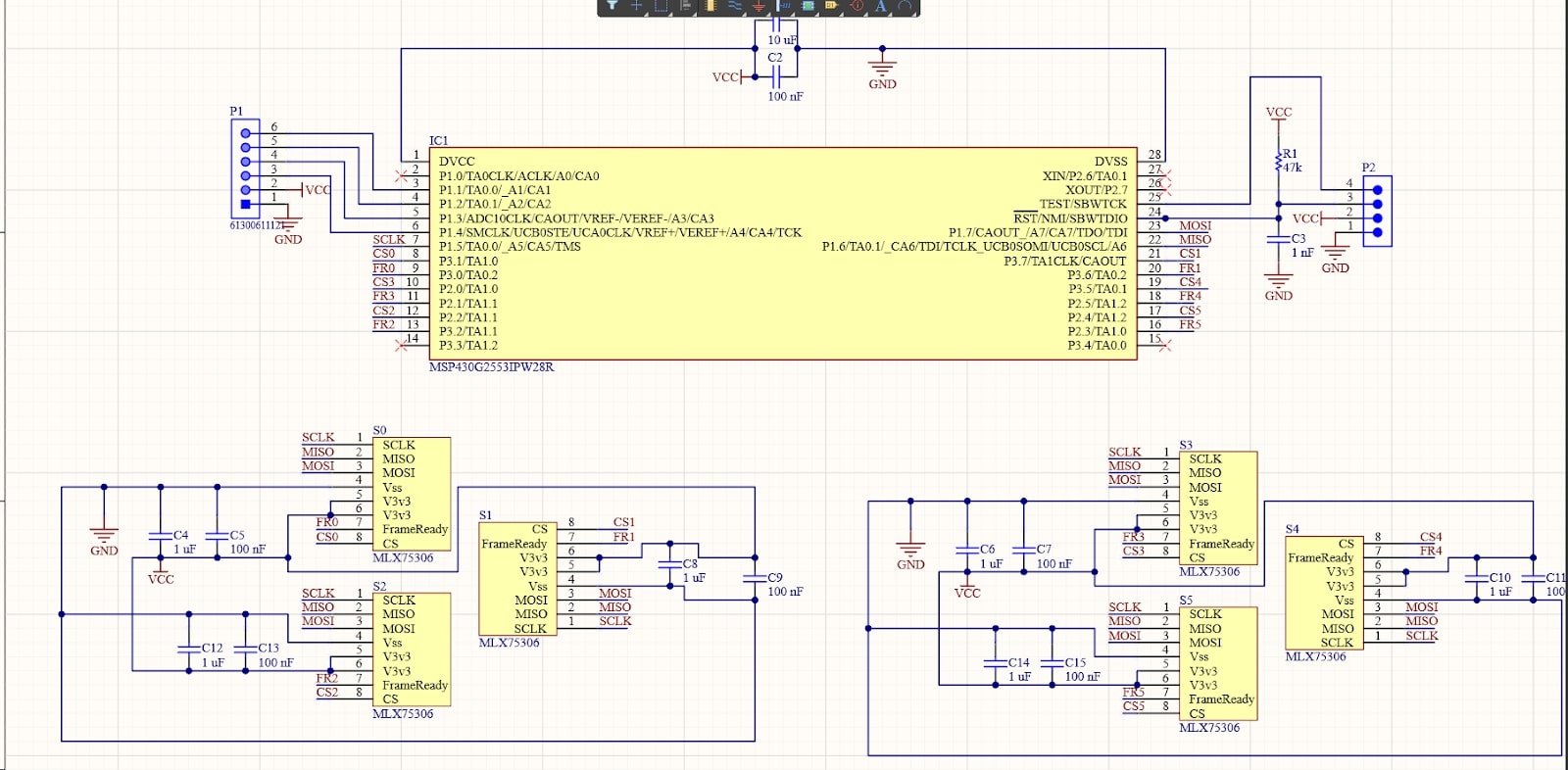

I began by designing the schematic to connect every pin and to make sure that each of the photodiodes could communicate with the MCU over SPI. The MCU addresses each of the photodiodes through a chip-select (CS) pin, and it also communicates with an external device over SPI to pass on the readings from the photodiodes.
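The chip-select scheme described above can be illustrated with a small simulation. This is only a sketch, not the board's firmware: `SimulatedSpiBus`, `read_all`, the active-low CS convention, and the made-up sensor values are all assumptions introduced for illustration.

```python
from typing import Callable, Dict, List


class SimulatedSpiBus:
    """Toy stand-in for a shared SPI bus with one active-low CS line per sensor.

    Hypothetical helper for illustration only; it does not talk to hardware.
    """

    def __init__(self, sensors: Dict[int, Callable[[], int]]) -> None:
        self._sensors = sensors                        # CS index -> reading function
        self._cs_high = {cs: True for cs in sensors}   # idle: every device deselected

    def select(self, cs: int) -> None:
        # Only one device may drive the shared data line at a time.
        if not all(self._cs_high.values()):
            raise RuntimeError("another device is already selected")
        self._cs_high[cs] = False                      # assumed active-low CS

    def deselect(self, cs: int) -> None:
        self._cs_high[cs] = True

    def transfer(self) -> int:
        selected = [cs for cs, high in self._cs_high.items() if not high]
        if len(selected) != 1:
            raise RuntimeError("exactly one device must be selected")
        return self._sensors[selected[0]]()


def read_all(bus: SimulatedSpiBus, cs_pins: List[int]) -> List[int]:
    """Poll each sensor in turn: select it, read it, then release the bus."""
    readings = []
    for cs in cs_pins:
        bus.select(cs)
        try:
            readings.append(bus.transfer())
        finally:
            bus.deselect(cs)
    return readings


# Six fake sensors, each returning a fixed made-up value.
fake_sensors = {cs: (lambda value=100 * (cs + 1): value) for cs in range(6)}
bus = SimulatedSpiBus(fake_sensors)
print(read_all(bus, list(range(6))))  # [100, 200, 300, 400, 500, 600]
```

Releasing the CS line in a `finally` block keeps the bus usable even if one read fails, which matters when several devices share the same data lines.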

About

This was a smaller project done for Build18 2021.

Our goal for the project was to create an autonomous foosball table capable of playing against real human players. The AI was trained using a deep learning model through countless simulations in Unity. For the sim-to-real transition, the program was then integrated with a Pi camera, ample lighting, and OpenCV. The Pi camera fed the state of the game to the program running on a Jetson Nano, which then sent instructions to an Arduino that controlled the stepper motors on the board, effectively controlling the players.

Prior to the start of the build-a-thon, the team spent several weeks designing the project and picking parts.

I was mostly in charge of the electrical side: picking out the angel lights and power supply, as well as designing how the foosball players would be moved with stepper motors, belts, and pulleys.
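For a belt-and-pulley drive like the one described, the conversion from rod travel to motor steps follows from the pulley geometry. The sketch below shows that arithmetic only; the function names and every number (200 steps per revolution, 16x microstepping, a 20-tooth pulley, 2 mm belt pitch) are illustrative assumptions, not the parts actually used on the table.

```python
def steps_per_mm(steps_per_rev: int, microsteps: int,
                 pulley_teeth: int, belt_pitch_mm: float) -> float:
    """Steps needed to move the belt 1 mm.

    One motor revolution advances the belt by pulley_teeth * belt_pitch_mm.
    """
    mm_per_rev = pulley_teeth * belt_pitch_mm
    return steps_per_rev * microsteps / mm_per_rev


def steps_for_move(current_mm: float, target_mm: float, spm: float) -> int:
    """Signed step count to go from current_mm to target_mm (negative = reverse)."""
    return round((target_mm - current_mm) * spm)


# Assumed example hardware values, for illustration only.
spm = steps_per_mm(steps_per_rev=200, microsteps=16,
                   pulley_teeth=20, belt_pitch_mm=2.0)
print(spm)                          # 80.0
print(steps_for_move(0, 50, spm))   # 4000
print(steps_for_move(50, 20, spm))  # -2400
```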

During build week, I focused on soldering and on wiring the motors, lights, Pi camera, and power supply together.

I also designed and implemented the initial motor-control code for the Arduino, as well as the communications between the Arduino and the Jetson Nano, which handled most of the heavy computation.
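One common way to pass motor commands from a host computer to a microcontroller is a small framed message with a checksum. The sketch below is a hypothetical example of that idea, not the protocol the team used: the start byte `0xAA`, the frame layout (rod index, signed 16-bit step count, 1-byte checksum), and the function names are all assumptions.

```python
import struct
from typing import Optional, Tuple

START_BYTE = 0xAA   # hypothetical frame marker
FRAME_LENGTH = 5    # start byte + 3 payload bytes + checksum


def encode_command(rod: int, steps: int) -> bytes:
    """Pack a command: rod index (0-255) and signed 16-bit step count."""
    payload = struct.pack("<Bh", rod, steps)   # little-endian, no padding
    checksum = sum(payload) & 0xFF             # simple additive checksum
    return bytes([START_BYTE]) + payload + bytes([checksum])


def decode_command(frame: bytes) -> Optional[Tuple[int, int]]:
    """Return (rod, steps), or None if the frame is malformed or corrupted."""
    if len(frame) != FRAME_LENGTH or frame[0] != START_BYTE:
        return None
    payload, checksum = frame[1:4], frame[4]
    if sum(payload) & 0xFF != checksum:
        return None
    rod, steps = struct.unpack("<Bh", payload)
    return rod, steps


frame = encode_command(rod=2, steps=-1200)
print(decode_command(frame))  # (2, -1200)
```

The receiving side (here, the Arduino) would apply the same checks before acting on a command, so a corrupted byte results in a dropped frame rather than an unintended motor move.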

Contributors: Edward Li, Omkar Savkar, George Gao, Kyle Newman, Nick Alvarez, Gilbert Fan

Build18 Garage

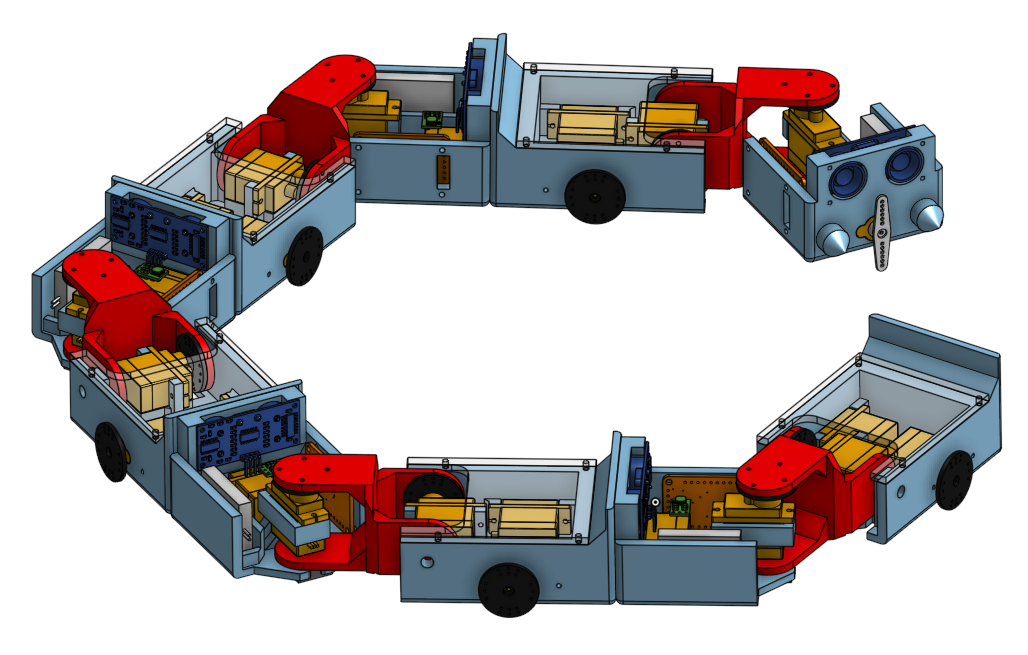

The image above shows the final CAD model of the robot.

About

This project was built for Build18 2020, during the Build18 Hardware Hackathon, and won the Rockwell Automation and Builder's Choice Awards.

Its purpose was to serve as a proof of concept for a modular robot that can perform search-and-rescue functions. The 4 robots (which differ only in color) are built to connect in a modular manner. This modularity makes it possible to overcome obstacles in difficult terrain during search and rescue, and it also lets the robots split up and cover more ground. Furthermore, the modularity opens up the possibility of custom-fitting each separate unit with different sensors in order to serve different purposes. For example, while our project only fitted one robot with a detector for atmospheric and airborne substances, it is possible to attach different sensors to the other units to detect additional things.

Each robot was built with an ultrasonic sensor for navigation and for finding other units to link up with, a small Wi-Fi chip to communicate with a computer, servos for movement, and a small microprocessor. Finally, the models were 3D printed with PVC plastic.
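Ultrasonic range sensors of this kind typically report the round-trip time of a sound pulse, and the distance follows from the speed of sound. The sketch below shows that conversion and a simple proximity check; the function names and the 10 cm docking threshold are assumptions for illustration, not values from the robots.

```python
SPEED_OF_SOUND_M_PER_S = 343.0  # approximate value in dry air at 20 °C


def echo_to_distance_cm(echo_us: float) -> float:
    """Convert a round-trip echo time in microseconds to a one-way distance in cm."""
    round_trip_m = SPEED_OF_SOUND_M_PER_S * echo_us * 1e-6
    return round_trip_m / 2 * 100   # halve for one-way distance, then m -> cm


def is_neighbor_in_range(echo_us: float, docking_range_cm: float = 10.0) -> bool:
    """True if the detected object is close enough to attempt linking up.

    The 10 cm default is an assumed threshold.
    """
    return echo_to_distance_cm(echo_us) <= docking_range_cm


print(round(echo_to_distance_cm(1000), 2))  # 17.15
print(is_neighbor_in_range(500))            # True
print(is_neighbor_in_range(1000))           # False
```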

Contributors: Edward Li, George Gao, Kyle Newman, Omkar Savkur.

Edward and I worked on the CAD design of the base model, with Edward going on to help with the Arduino code, whereas I finished up by building the extra parts on the robots. Kyle and Omkar did wonders by building most of the code for the robot, wiring, and putting everything together.

About

This was a smaller project that I did for the class 24-101, Introduction to Mechanical Engineering. It was built following a preset design given by the instructors of the class. Even with the preset, we still needed to build, code, and optimize our robot. In the process, we recreated the given design in a CorelDRAW file so that we could laser cut it. We coded a basic algorithm and uploaded it to the provided Arduino, which served as the robot's microprocessor. The robot used an ultrasonic sensor to determine the final stop position, as well as a simple 3-input light sensor on the bottom of the robot, which was used to detect the line and path the robot was following.

Contributors: George Gao, Francesco Fortunelli, Jacob Smith.
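The control logic for a robot like this one, with a 3-input line sensor and an ultrasonic stop condition, can be sketched as a small decision function. This is an illustrative guess at the general approach, not the class's provided algorithm: the function names, the action labels, and the 15 cm stop distance are all assumptions.

```python
from typing import Tuple


def steer(left: bool, center: bool, right: bool) -> str:
    """Pick a steering action from three line sensors (True = sensor sees the line)."""
    if left and center and right:
        return "forward"      # wide mark or crossing: keep going straight
    if left and not right:
        return "left"         # line has drifted toward the left sensor
    if right and not left:
        return "right"        # line has drifted toward the right sensor
    if center:
        return "forward"      # only the center sensor sees the line
    return "search"           # line lost or contradictory reading


def next_action(sensors: Tuple[bool, bool, bool], distance_cm: float,
                stop_distance_cm: float = 15.0) -> str:
    """Stop when the ultrasonic distance reaches the (assumed) stop threshold."""
    if distance_cm <= stop_distance_cm:
        return "stop"
    return steer(*sensors)


print(next_action((False, True, False), distance_cm=80.0))  # forward
print(next_action((True, False, False), distance_cm=80.0))  # left
print(next_action((False, True, False), distance_cm=12.0))  # stop
```

Checking the stop condition before the steering logic means the robot halts at the target distance regardless of what the line sensors report.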